Last Week: 2025 AI Roadmap 🛣️

Next Week: Cloud Security Resources ⚔️ - Micro Break

This Week: How to run OpenAI’s GPT OSS locally

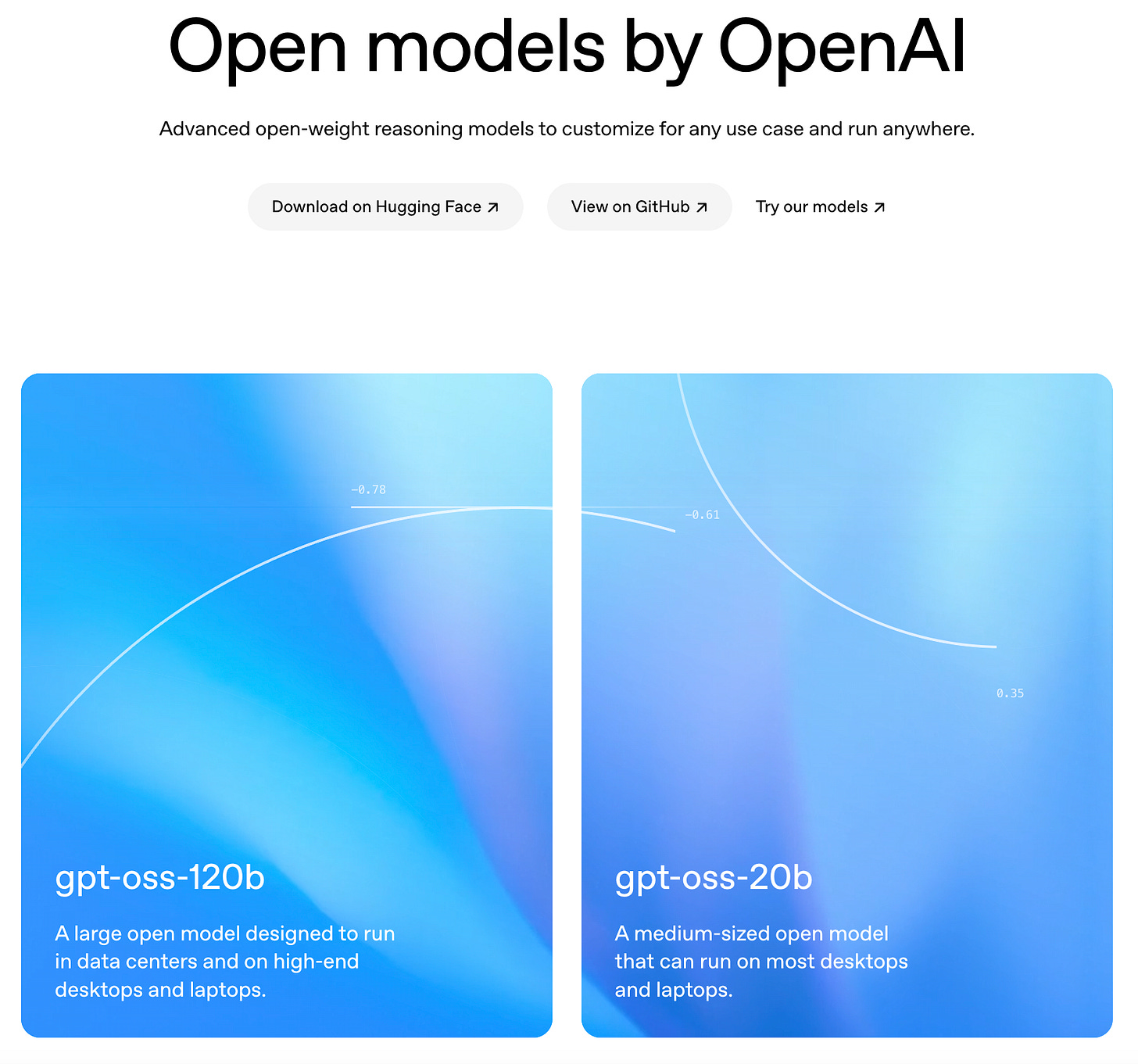

GPT OSS Locally: Two New Models You Can Run Yourself

Two new open source GPT models have just dropped, and you can download and run them for free. There's no sending your data off to OpenAI or any other third-party API. It all stays on your machine.

You’ll need some decent(ish) hardware. The smaller 20B parameter model is realistic for a well-specced local setup. The monster 120B version demands more compute than most home rigs can handle. I’m guessing you don’t have a GPU with 80GB or more of memory (like an NVIDIA H100 or AMD MI300X). If you do though, let me know!
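To put rough numbers on that, here is a back-of-the-envelope sketch. It assumes the nominal 20B and 120B parameter counts and compares weights stored at about 4 bits versus 16 bits per parameter. Those bit widths are illustrative assumptions, and real memory use will be higher once you add the context cache and runtime overhead.

```python
def weight_memory_gb(params_billions: float, bits_per_param: float) -> float:
    """Approximate memory needed just to hold the weights, in GB (10^9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


# Assumed, illustrative inputs: nominal parameter counts, 4-bit vs 16-bit storage.
# This ignores the context cache and runtime overhead, so treat it as a floor.
for name, size_in_billions in [("20B", 20), ("120B", 120)]:
    at_4_bit = weight_memory_gb(size_in_billions, 4)
    at_16_bit = weight_memory_gb(size_in_billions, 16)
    print(f"{name}: ~{at_4_bit:.0f} GB at 4-bit, ~{at_16_bit:.0f} GB at 16-bit")
```

Under those assumptions the 20B weights come to roughly 10 GB at 4-bit, while the 120B weights land around 60 GB, which is why the bigger model is pitched at 80GB-class hardware.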

Let’s do this

Step One:

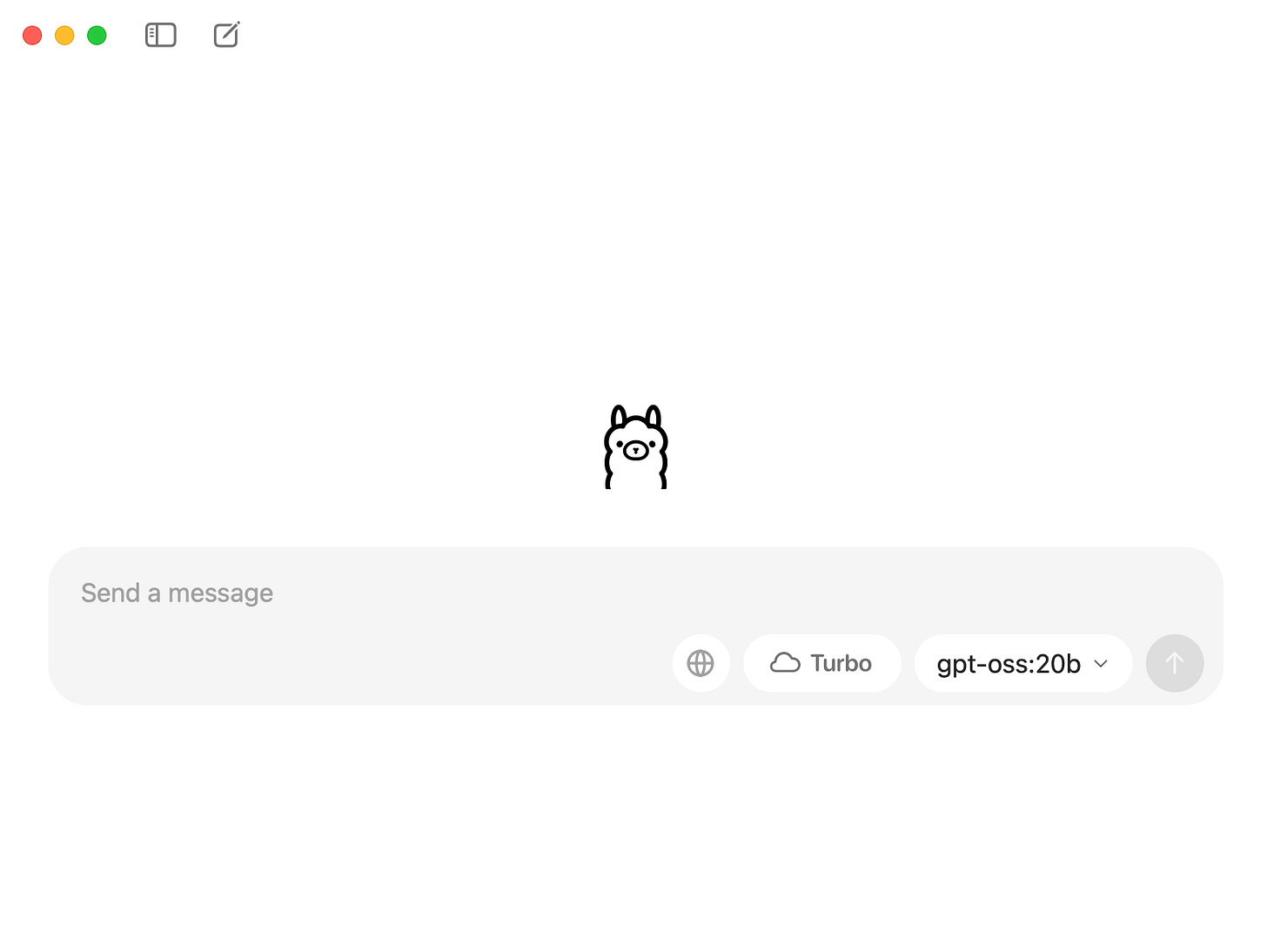

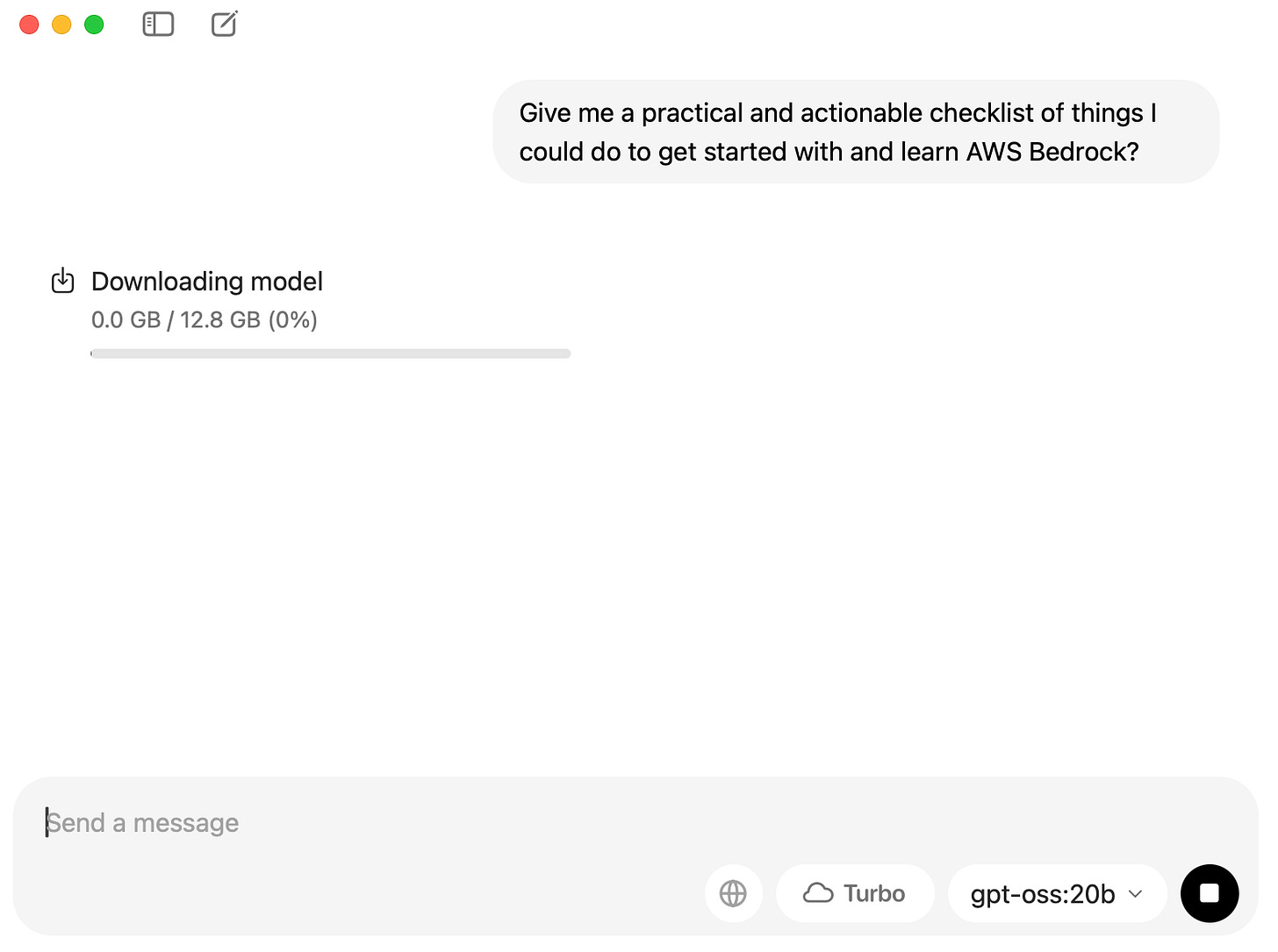

We’ll start with Ollama, a lightweight tool that makes running LLMs locally super easy, with no coding or cloud setup required.

Head over to Ollama and download:

Run through the setup wizard:

Once finished, you’ll see the main Ollama window pop open.

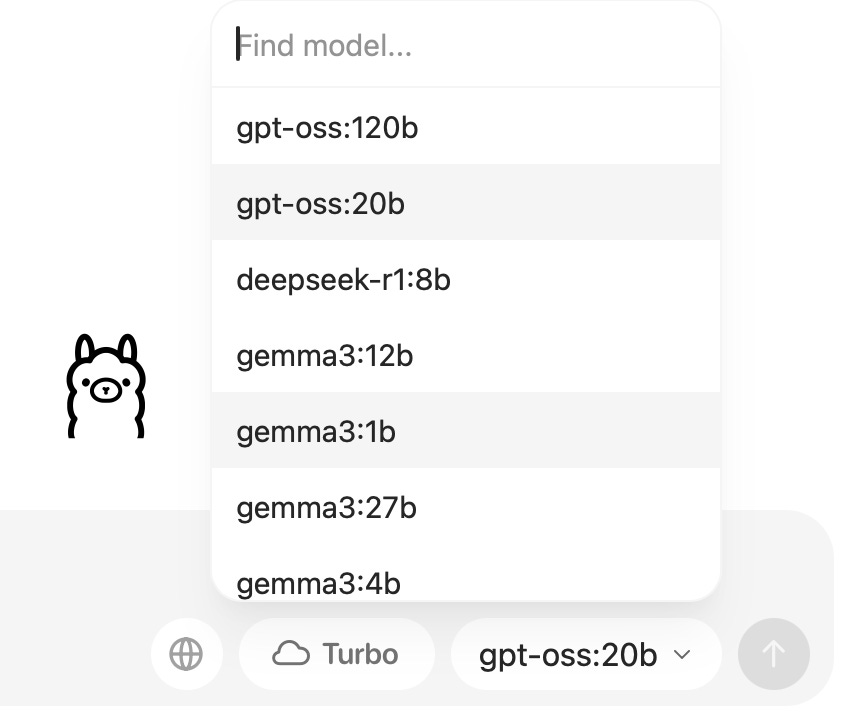

The model picker is already populated with the models you can choose from.

Pick the 20B model and send your first message, and the download will begin.

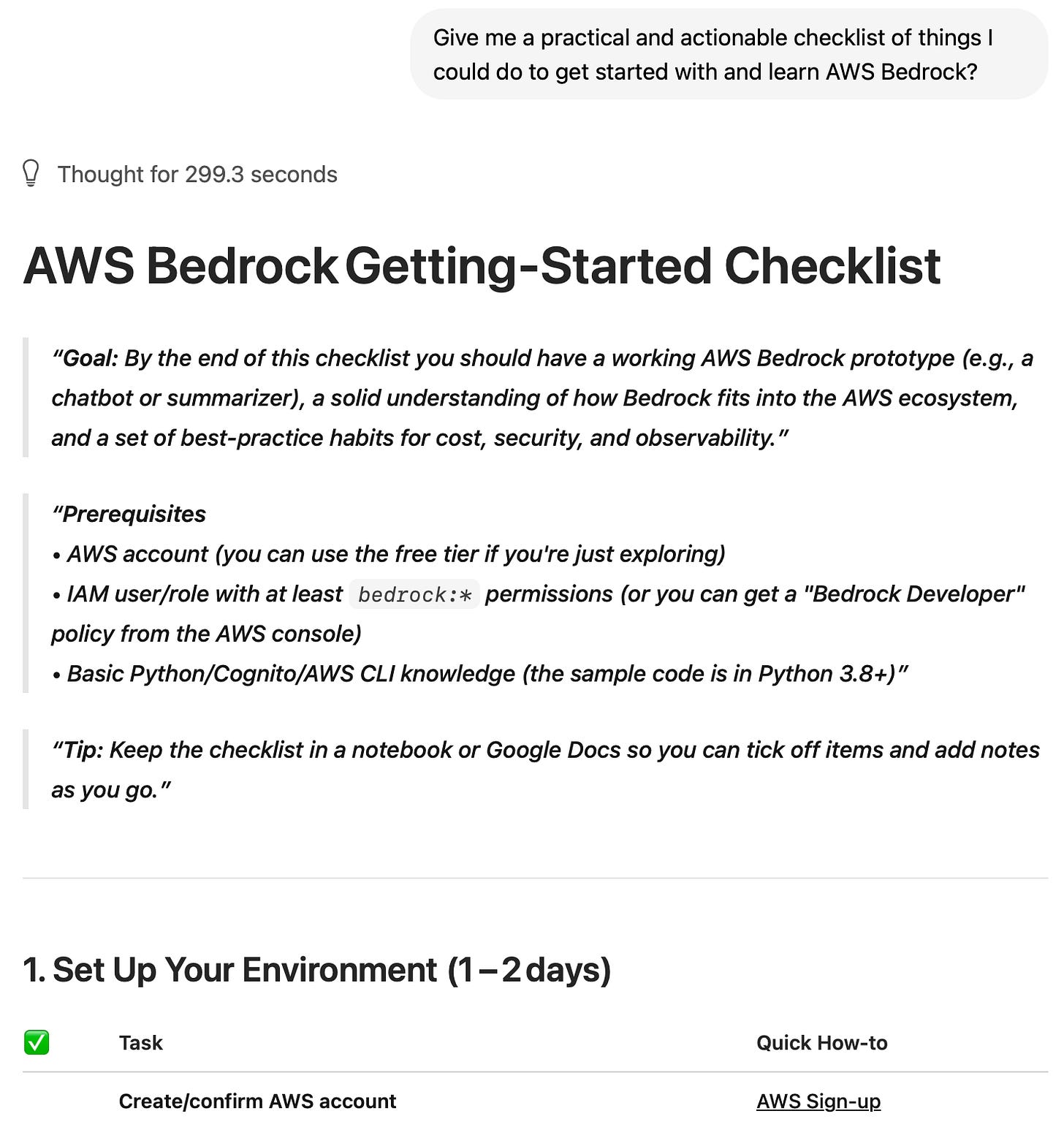
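If you'd like to confirm the download from outside the app, Ollama also runs a small local HTTP server. The sketch below assumes it is listening on its default address, `http://localhost:11434`, and uses the `/api/tags` endpoint, which lists the models stored on your machine. The helper names are my own, purely for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default local address


def downloaded_models(tags_response: dict) -> list[str]:
    """Pull the model names out of an /api/tags response body."""
    return [model["name"] for model in tags_response.get("models", [])]


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Ask the local Ollama server which models it has stored (needs Ollama running)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as response:
        return json.load(response)
```

With Ollama running, `print(downloaded_models(fetch_tags()))` should list the model once the download has finished. Nothing here leaves your machine, since the request only goes to localhost.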

Since we’re downloading this model, it of course has a knowledge cutoff, but we can enrich it with live data from the web. In the bottom right, click the search feature. This means we get the best of both worlds: my data stays local, but I can still access relevant live information.

Just a quick one this week, real easy stuff! Nothing groundbreaking, however…

Hopefully, small, actionable things like this encourage you to take it one step further, maybe by integrating this local model into a Python script, documenting a RAG pipeline, or exploring what embeddings are.
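As a taste of the Python route, here is a minimal sketch that talks to the local model through Ollama's `/api/chat` endpoint using only the standard library. It assumes Ollama is running on its default port and that the 20B model is tagged `gpt-oss:20b` on your machine, so adjust the name if yours differs. The function names are hypothetical, just for this example.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default local port
MODEL_NAME = "gpt-oss:20b"  # assumption: the tag of the model you downloaded


def build_chat_request(prompt: str, model: str = MODEL_NAME) -> dict:
    """Build the JSON body for a single-turn, non-streaming chat request."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete reply instead of chunks
    }


def ask_local_model(prompt: str, model: str = MODEL_NAME) -> str:
    """Send the prompt to the local Ollama server and return the reply text."""
    body = json.dumps(build_chat_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=body,
        headers={"Content-Type": "application/json"},
    )
    # Generous timeout: the first reply can be slow while the model loads.
    with urllib.request.urlopen(request, timeout=300) as response:
        reply = json.load(response)
    return reply["message"]["content"]
```

With the model downloaded and Ollama running, something like `print(ask_local_model("Explain what an embedding is in one sentence."))` should print a reply generated entirely on your own hardware.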

The list of what you can explore here is endless. Let me know if you’d like me to set some of that stuff up.

WJPearce - CyberBrew

